Artificial Intelligence: A General Overview

You probably interact with AI in your daily life more than you realize. Alexa, Siri, Google Home, all the advertisements that you see on the internet daily… They all function with AI. With all of this AI tech shaping our daily lives, it is important to know how it functions.

Summary

We will discuss in order:

— Purpose of AI

— Different Types of AI

— Neural Networks

— Other Resources

Purpose of AI

The purpose of AI is to make machines think like humans. It is important that AI thinks and interacts like a human because that is what feels easiest to us. We definitely do not want 100% accuracy; human error and shortcuts feel natural and more fluent.

That might sound odd at first, but let me give you an example so you get my point. Imagine asking your Alexa, “What’s up?” If it responded in a perfect manner, you would get something like “So far my experience of this day that we are currently in is slightly better than average.” Think about it! Imagine if your friend responded to you in that way. I would call the police! Fine, maybe not, but that would be really awkward. By making AI human, teaching it shortcuts, informality and stuff like that, you will get a response more like “Good, how ’bout you?”

It is intuitive to think that 100% is always better, but humans are definitely not 100%, so why not make it easier on ourselves and make our assistants “imperfect” just like us?

Another very common question is: why use intelligent machines at all? Instead of AI assistants, why not use a real human? Well, there are actually many advantages of AI over humans:

- Speed: Since AI runs on computers, it is much faster than a normal human at doing tasks.

- Cost: You have to pay a human a salary to do a task. Once you construct an AI that is fit for your desired work, you don’t have to pay it a salary.

- Durability: Humans have limits, while AI is literally a machine. If we want face recognition, we can set up a machine that does it, but no human will, or is able to, stand in one spot for the rest of their life looking at the face of every passerby.

To sum it up, the purpose of AI is to substitute humans with a machine that is faster, stronger and cheaper.

Different Types of AI

It is a mistake to think that all the AI you encounter in your daily life is built on the same basis. There are different structures and approaches for AI, each method shining in a different area.

1. Decision Tree

A decision tree is a type of AI that makes a decision by answering a series of questions, where each answer leads down a branch of a tree of possibilities. For example, by finding the answers to the questions on the tree, the algorithm can decide whether to play tennis or not based on human conventions (e.g. tennis isn’t played during a storm).
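To make that concrete, here is a minimal sketch of the tennis example in Python. The questions themselves (`outlook`, `humidity`, `wind`) and their answers are my own hypothetical picks for illustration, and the tree is written by hand here, whereas a real decision tree algorithm would learn its questions from data.

```python
def should_play_tennis(outlook: str, humidity: str, wind: str) -> bool:
    """Walk a tiny hand-written decision tree, one question per level.

    The branches are hypothetical; only the "no tennis during a storm"
    rule comes from the example in the text.
    """
    if outlook == "storm":
        return False  # tennis isn't played during a storm
    if outlook == "sunny":
        # Second question on the sunny branch: is it too humid?
        return humidity != "high"
    if outlook == "rainy":
        # Second question on the rainy branch: is the wind too strong?
        return wind != "strong"
    return True  # any other outlook (e.g. overcast): go play


print(should_play_tennis("storm", "normal", "weak"))  # False
print(should_play_tennis("sunny", "normal", "weak"))  # True
```

Each `if` is one question on the tree, and each `return` is a leaf where the decision is finally made.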

2. NLP (Natural Language Processing)

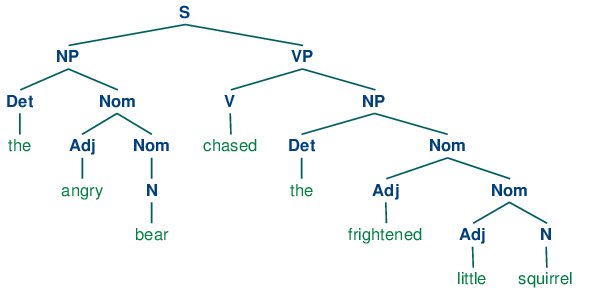

As you can see in the diagram above, the NLP algorithm can break a sentence down into a hierarchy tree, which kinda reminds me of the order of operations in mathematics. At the lowest level of the tree, where NLP classifies words as adjectives, nouns and so on, the algorithm could incorporate another type of AI (e.g. neural networks, which I will get to) to say whether a single word is a verb or a noun. Even though multiple types of AI could be used here, the general approach is called Natural Language Processing.

3. Neural Networks

I know that you were waiting for me to mention the popular guy in town! In the modern day of artificial intelligence, neural networks are the field that tends to get the most attention because they perform so well. “But why do they tend to perform the best?” Let’s try rewording that question. AI is good if it acts and makes decisions like a human. Going off of that, we can turn that question into “But why do neural networks tend to be the most human most of the time?” I will get into that in the rest of the article, which is devoted to neural networks because of their modern-day success.

Neural Networks

Neural networks have a relatively weird name. After all, “Decision Tree” and “Natural Language Processing” make much more sense: they describe what they are. This is also the case with NNs (neural networks); it is just not as obvious. NNs were inspired by the structure of the human brain (that’s the reason they generally are the most similar to humans, or the “best”). In the human brain, individual neurons (nerve cells) connect with each other, forming a network much like the neural networks that we use today!

Let’s get into the technical part of these brain-simulators. The mechanics of NNs are split into 2 general processes: forward propagation and backward propagation. In short, forward propagation is predicting the result, and backward propagation is learning. All of this will make more sense in a moment, trust me.

1. Forward Propagation

To understand forward propagation, and neural networks as a whole, we need to know what an individual neuron does in a neural network.

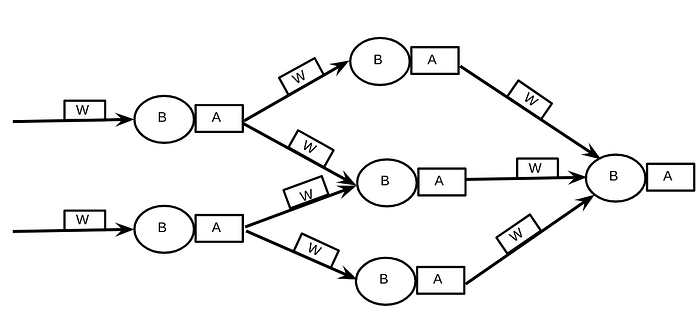

First let us agree upon the vocabulary used to describe an NN. The lines are edges, or connections between neurons in the network. The big circle in the middle is called a node.

Let’s see what’s going on here. The input, X, travels down the first edge. That edge has a weight, named W. The input X gets multiplied by W. Soon after that, the number W * X enters the node, which adds the bias, B, to W * X. Now we have W * X + B. That number enters the activation function, A. The activation function “compresses” W * X + B. More on activation functions later. The output, Y, for this individual “neuron” will eventually be either the input for another neuron, or one of the outputs of the whole network.
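If you prefer reading code, the journey of X through a single neuron can be sketched like this. The function names and the numbers are just my picks for illustration, and I am assuming sigmoid as the activation function A (any other activation would slot in the same way).

```python
import math


def sigmoid(z: float) -> float:
    # Assumed activation function A: squeezes any number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x: float, w: float, b: float) -> float:
    """Forward pass of one neuron with one input: Y = A(W * X + B)."""
    weighted = w * x        # the input travels down the edge and is multiplied by W
    shifted = weighted + b  # the node adds the bias B
    return sigmoid(shifted)  # the activation function produces the output Y


y = neuron(x=2.0, w=0.5, b=-1.0)
print(y)  # 0.5, because 0.5 * 2.0 - 1.0 = 0 and sigmoid(0) = 0.5
```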

In the above example I used one input and one output for each neuron for the sake of simplicity, but a neuron can have several inputs, and its output can feed several other neurons. With multiple inputs, each one has its own weight, and the weighted inputs are added together before the bias is added. With what we just learned, this is what a neural network looks like to us. I would recommend taking a moment to make sure all of this makes sense.
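Here is a rough sketch of how forward propagation could look once neurons have multiple inputs and are stacked into layers. The layout of the network (a list of `(weight_rows, biases)` pairs) and the sigmoid activation are assumptions I made to keep the example small, not the only way to do it.

```python
import math


def sigmoid(z: float) -> float:
    # Assumed activation function for every neuron in this sketch.
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    # Multiply every input by the weight of its edge, add them up, add the bias.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    # One row of weights and one bias per neuron in the layer.
    return [neuron(inputs, weights, bias) for weights, bias in zip(weight_rows, biases)]


def forward(inputs, layers):
    # The outputs of one layer become the inputs of the next one.
    for weight_rows, biases in layers:
        inputs = layer(inputs, weight_rows, biases)
    return inputs


# A made-up network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                    # output layer
]
print(forward([1.0, 2.0], network))
```

Notice that `forward` is nothing more than the single-neuron recipe repeated for every neuron, layer after layer.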

2. Backward Propagation

This is the core part of what we call machine learning. Before we get into the technical part, let me explain what we mean by learning. When a neural network learns, what we do is tell it the difference between the output that it “predicts” and the correct answer. This is called the loss. Using that “feedback”, it updates all of the weights and biases inside it using the backpropagation algorithm so that the next time we ask it to predict something, it is a tad bit closer.
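As a small illustration, here is one common way to turn “the difference between the prediction and the correct answer” into a single number. I am assuming the squared error here; it is only one of several loss functions people use.

```python
def squared_error(predicted: float, target: float) -> float:
    """Loss for one prediction: 0 when it is perfect, larger the further off it is."""
    return (predicted - target) ** 2


print(squared_error(predicted=3.0, target=1.0))  # 4.0
print(squared_error(predicted=1.0, target=1.0))  # 0.0
```

Squaring the difference means that overshooting and undershooting are punished the same way.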

Uh oh. Here comes the math! If that was your reaction, get yourself out of that mentality. You will not be able to learn anything that intimidates you until you stop being afraid of it.

A very common first question is how we update the weights and biases. How do we know which ones to change? How do we know how much to increase/decrease them? Well, you have come to the correct place my friend.

With neural networks we do not have to know which ones to change; we only have to know how much to change each one. When the amount by which we change a weight/bias is very close to 0, we just say it didn’t get changed. Enough with what happens, let’s dive into the how.

Each weight/bias, even if insignificant, has an impact on the output of the whole network. The first step is to find out how big the influence of each weight/bias is on the final loss. We take the partial derivative of the loss with respect to each weight/bias to find how much it contributes to the loss. We then multiply that derivative by the learning rate (a small positive number, usually well below 1, e.g. 0.02) and subtract the result from the weight/bias, so that the NN does not update the weights/biases too violently. If the learning rate is too big, the weights and biases will overshoot the best value, landing above it, then below it, then above it again… like a pendulum. We don’t want that because it will take way too long to train the network. With a lower learning rate we steadily approach the optimal value for the weight/bias.
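To see the update rule and the pendulum effect in action, here is a minimal sketch on the simplest network I could think of: one neuron, one input, no activation function, and a squared-error loss. All of those choices, plus the numbers, are assumptions for the sake of illustration. A real network would use backpropagation to get the derivatives for every layer, but the update step itself looks the same.

```python
def train(x: float, target: float, learning_rate: float, steps: int = 20):
    """Gradient descent on one neuron: y = w * x + b, loss = (y - target) ** 2."""
    w, b = 0.0, 0.0
    errors = []
    for _ in range(steps):
        y = w * x + b       # forward propagation
        error = y - target  # positive: guessed too high, negative: too low
        errors.append(error)
        # Partial derivatives of the loss with respect to w and b.
        grad_w = 2 * error * x
        grad_b = 2 * error
        # Scale each derivative by the learning rate and subtract it.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, errors


# Small learning rate: the error creeps towards 0 from one side.
_, _, small_errors = train(x=1.0, target=1.0, learning_rate=0.02)
# Big learning rate: the error flips sign on every step, like a pendulum.
_, _, big_errors = train(x=1.0, target=1.0, learning_rate=0.45)

print(small_errors[:4])
print(big_errors[:4])
```

If you push the learning rate even higher in this toy setup (try 0.6), the swings get bigger on every step instead of smaller, and the network never settles at all.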

Congrats! You have made it through the difficult part!

3. Activation Functions

Activation functions are what we use to adjust the output of a single node one last time. Here are 2 of the most popular activation functions:

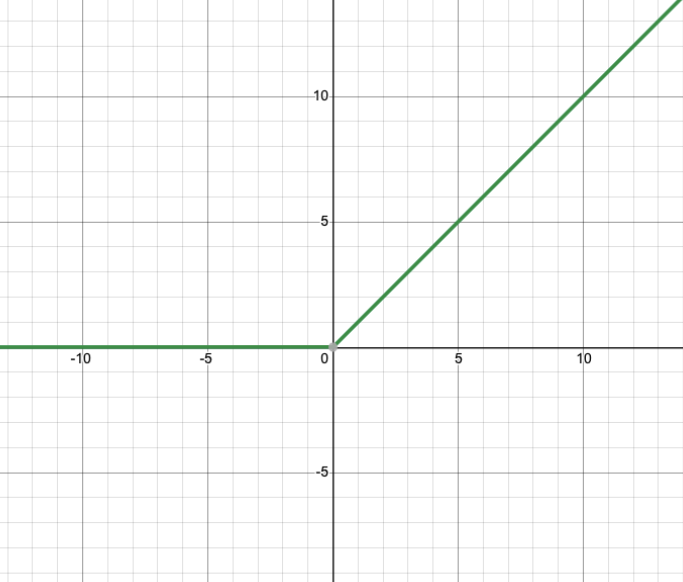

1. ReLU (Rectified Linear Unit)

This function takes in the value of its corresponding node and returns the maximum of that value and 0. Here’s the graph for the ReLU function:

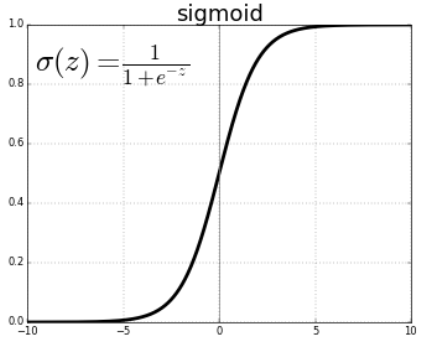

2. Sigmoid

Sigmoid is just another activation function like ReLU; it just looks different. Its job is to compress any number given to it into a decimal number between 0 and 1.
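Both functions are short enough to write out by hand. Here is a quick sketch using only Python’s standard library; the sigmoid uses its usual formula, 1 / (1 + e^(-z)), and the input values are just examples I picked.

```python
import math


def relu(z: float) -> float:
    # Negative values become 0, positive values pass through unchanged.
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Squeezes any number into the range between 0 and 1.
    return 1.0 / (1.0 + math.exp(-z))


print(relu(-3.0))    # 0.0
print(relu(2.5))     # 2.5
print(sigmoid(0.0))  # 0.5
```

Very negative inputs push sigmoid towards 0 and very positive inputs push it towards 1, which is the “compressing” mentioned above.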

Other Resources

The following are absolutely awesome resources that I used to educate myself on neural networks:

- 3Blue1Brown NN series (Awesome animations & explanations) :

- Andrew Ng’s Coursera course on machine learning (top educator, dives deep into the technical mathematics!): https://www.coursera.org/learn/machine-learning

Hope this article has added at least some value to you and that you have enjoyed it! 😄

Sources:

- https://www.coursera.org/learn/machine-learning

- https://www.youtube.com/watch?v=aircAruvnKk

- https://www.geeksforgeeks.org/decision-tree/

- https://www.nltk.org/book/ch08.html

- https://developer.oracle.com/databases/neural-network-machine-learning.html

- https://www.freecodecamp.org/news/want-to-know-how-deep-learning-works-heres-a-quick-guide-for-everyone-1aedeca88076/

- https://deeplearninguniversity.com/relu-as-an-activation-function-in-neural-networks/

- https://saugatbhattarai.com.np/what-is-activation-functions-in-neural-network-nn/